We just completed the storage migration from VMAX to PMAX 8000 and 8500 before Christmas. NDM is not an option because it no longer supports Solaris. Besides, there are a number of busy databases running in both test and production. Until cutover to PMAX, the SRDF directors between VMAX and PMAX will be the bottleneck, and we have no spare directors left to configure for SRDF traffic.

Our plan is to use Storage vMotion for all VMDKs. The RDMs are for SQL databases running on ESX. For the RDMs, we use SRDF/S to start replication to PMAX in the background. Because SRDF is not supported from VMAX to PMAX 8500, all LUNs migrated with SRDF are replicated to the PMAX 8000. The app owner then picks a downtime window to shut down the app and the servers. After confirming there are no outstanding tracks, we stop the sync, unmask the VMAX LUNs from the host's initiator group, and mask the PMAX LUNs to it.
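The "no outstanding tracks" gate before stopping the sync can be written down as a small check. This is only a sketch: the `PairStatus` record and its field names are hypothetical, the sample device IDs are made up, and the values would have to come from your own reading of the SRDF query output, which is not parsed here.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class PairStatus:
    """Hypothetical record for one SRDF pair, filled in from a query you ran yourself."""
    source_dev: str         # VMAX device ID
    target_dev: str         # PowerMax 8000 device ID
    state: str              # pair state as reported, e.g. "Synchronized"
    r1_invalid_tracks: int  # tracks still outstanding on the R1 side
    r2_invalid_tracks: int  # tracks still outstanding on the R2 side


def pairs_blocking_split(pairs: Iterable[PairStatus]) -> List[PairStatus]:
    """Return every pair that is not synchronized or still has outstanding tracks."""
    return [
        p for p in pairs
        if p.state != "Synchronized"
        or p.r1_invalid_tracks != 0
        or p.r2_invalid_tracks != 0
    ]


# Made-up sample: one finished pair, one still copying.
sample = [
    PairStatus("00A1", "01B1", "Synchronized", 0, 0),
    PairStatus("00A2", "01B2", "SyncInProg", 0, 1520),
]
blocking = pairs_blocking_split(sample)
if blocking:
    print("wait for:", [p.source_dev for p in blocking])
else:
    print("safe to stop the sync")
```

The function returns the offending pairs rather than a plain yes/no, so whoever runs the cutover can see which LUNs are still copying.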

For Solaris, if it is an Oracle DB, LUNs of the same size or larger are added to Oracle. The DBA then completes the rebalancing and drops the old VMAX LUNs. For boot LUNs and LUNs from other apps, some of our Unix admins use SRDF/S to migrate them to the PMAX 8000, while others prefer host-based migration. For host-based migration, we just give the Unix admin a LUN of the same size or larger from the PMAX 8500.
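To keep the different cases straight, the choices described so far can be summarized as a lookup. This is just an illustrative sketch: the workload labels (`esx_vmdk`, `esx_rdm`, `solaris_oracle`, `solaris_other`) are hypothetical names invented for the example, and the target array is left as `None` wherever the text above does not name one.

```python
from typing import NamedTuple, Optional


class Plan(NamedTuple):
    method: str
    target_array: Optional[str]  # None where the write-up does not name the array


def plan_for(workload: str, host_based: bool = False) -> Plan:
    """Map a (hypothetical) workload label to the migration approach described above."""
    if workload == "esx_vmdk":
        return Plan("Storage vMotion", None)
    if workload == "esx_rdm":
        # No SRDF from VMAX to PMAX 8500, so SRDF always lands on the 8000.
        return Plan("SRDF/S", "PMAX 8000")
    if workload == "solaris_oracle":
        return Plan("add new LUNs to Oracle, rebalance, drop old LUNs", None)
    if workload == "solaris_other":  # boot LUNs and LUNs from other apps
        if host_based:
            return Plan("host-based migration", "PMAX 8500")
        return Plan("SRDF/S", "PMAX 8000")
    raise ValueError(f"unknown workload: {workload!r}")


print(plan_for("esx_rdm"))
print(plan_for("solaris_other", host_based=True))
```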

The steps below summarize the general procedure for storage migration from VMAX to PMAX 8000 using SRDF/S. Please check your environment and test to see whether additional steps are required. If a volume manager is in use, the migration should be completed with the volume manager rather than SRDF.

1) Change the attribute of the source LUNs on the VMAX to dyn_rdf.

2) Set up the SRDF/S pairs from VMAX to PowerMax 8000 (put the target LUNs in a temporary target_SG).

3) During the downtime, shut down the apps and servers.

4) Confirm there are no outstanding tracks.

5) Perform the SRDF split.

6) Remove the source LUNs from the VMAX storage group.

7) Add the target LUNs to the SG on the PowerMax and remove them from the temporary target_SG.

8) Host team completes the LUN mapping.

9) Delete the SRDF pairs with the force option.

10) Unset the GCM bit if required (symdev -sid xxx -devs xxx unset -gcm).

11) Host team can perform a rescan if step 10 was required. (They should see about 1 MB more space on the LUNs from step 10.)

12) Power up the servers to validate.

Since the VMAX LUNs are only removed from the storage group and never deleted, the host team can always go back to them if a backout is required.
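The "about 1MB" in steps 10 and 11 can be checked with some quick arithmetic. The sketch below rests on geometry figures that are not stated in this post and should be treated as assumptions: 15 tracks per cylinder on both arrays, 64 KB tracks on VMAX, and 128 KB tracks on PowerMax. Under those assumptions, a VMAX LUN with an odd cylinder count ends in half a PowerMax cylinder, which is the case where the GCM bit comes into play.

```python
TRACKS_PER_CYLINDER = 15  # assumption: same on both arrays
VMAX_TRACK_KB = 64        # assumption: VMAX track size
PMAX_TRACK_KB = 128       # assumption: PowerMax track size


def gcm_effect(vmax_cylinders: int) -> dict:
    """Estimate whether a VMAX LUN needs GCM on PowerMax and what unsetting it adds."""
    vmax_kb = vmax_cylinders * TRACKS_PER_CYLINDER * VMAX_TRACK_KB
    pmax_cyl_kb = TRACKS_PER_CYLINDER * PMAX_TRACK_KB
    # Round up to whole PowerMax cylinders (ceiling division).
    pmax_cylinders = -(-vmax_kb // pmax_cyl_kb)
    # Space that only becomes visible once the GCM bit is unset.
    extra_kb = pmax_cylinders * pmax_cyl_kb - vmax_kb
    return {
        "needs_gcm": extra_kb > 0,
        "pmax_cylinders": pmax_cylinders,
        "extra_kb_after_unset": extra_kb,
    }


print(gcm_effect(1093))  # odd cylinder count on the VMAX
print(gcm_effect(1092))  # even cylinder count, no GCM needed
```

With these numbers an odd-sized LUN gains 960 KB after the GCM bit is unset, which is a little under 1 MB, and an even-sized LUN gains nothing.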

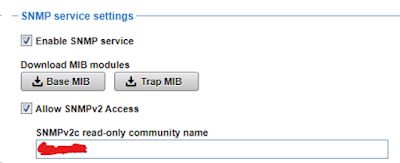

Note: in the past, when the source or target LUNs were not mapped to FE ports, I sometimes saw strange results from some SRDF operations. So I create a temporary target_SG whose masking view has an IG with no HBAs in it.
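For anyone who wants to script the SRDF part of the steps above, here is a rough dry-run sketch that only builds the command lines and prints them; it does not execute anything. The helper names, array IDs, RDF group number, file name, and device IDs are all hypothetical. The `symrdf` actions shown (`createpair`, `verify`, `split`, `deletepair`) should be checked against the Solutions Enabler version in your environment before use, and the sketch does not cover the dyn_rdf attribute change, setting the replication mode, or the storage group and masking steps.

```python
from typing import List, Tuple


def pair_file_lines(pairs: List[Tuple[str, str]]) -> List[str]:
    """One 'source target' line per pair, for use as a symrdf device file."""
    return [f"{src} {tgt}" for src, tgt in pairs]


def srdf_commands(vmax_sid: str, pmax_sid: str, rdfg: int,
                  pair_file: str, gcm_devs: str = "") -> List[str]:
    """Build (not run) the SRDF command lines in the order of the steps above."""
    base = f"symrdf -sid {vmax_sid} -rdfg {rdfg} -file {pair_file}"
    cmds = [
        f"{base} createpair -type R1 -establish",  # step 2: create pairs, start copy
        f"{base} verify -synchronized",            # step 4: no outstanding tracks
        f"{base} split",                           # step 5
        f"{base} deletepair -force",               # step 9
    ]
    if gcm_devs:
        # Step 10, only if required; runs against the PowerMax, not the VMAX.
        cmds.append(f"symdev -sid {pmax_sid} -devs {gcm_devs} unset -gcm")
    return cmds


# Hypothetical IDs, for illustration only.
for line in pair_file_lines([("00A1", "01B1"), ("00A2", "01B2")]):
    print(line)
for cmd in srdf_commands("0123", "0456", 10, "pairs.txt", gcm_devs="01B1:01B2"):
    print(cmd)
```

Printing the commands first gives the storage and host teams something to review before the downtime window; steps 3, 6, 7, 8, 11 and 12 still happen by hand in between.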