With DCNM reaching EOL in April 2026, the only option left is to upgrade to NDFC. Some sites may decide to keep running DCNM until their whole infrastructure is migrated to the cloud. Because we are still refreshing old MDS 9700 switches, migrating to NDFC is a must for us. The main issue with upgrading firmware on existing switches is smart licensing. We manage no more than 10 MDS FC switches at a time, so the setup is fairly simple. Still, it is more complicated than it was with DCNM.

It looks like 9.2(1a) is the last MDS 9000 firmware version that supports legacy licensing (license files). If you upgrade an existing switch from older firmware to 9.2(2), its licensing is switched to smart licensing automatically. I checked with support, and the answer I got is that existing switches upgraded to newer firmware will, in the worst case, end up in Donor mode. According to support, there should be no impact on functionality.

Some of our MDS switch licenses were purchased through a third-party vendor, and we later changed to another third-party vendor for support, so I don't know what would happen if a licensing issue came up after a firmware upgrade on an existing switch. We therefore decided to keep the existing older MDS 9700 switches on firmware 8.4(2e) until the hardware refresh completes in the next 2-3 years. There are a few bugs affecting firmware 8.4, so confirm you have all the workarounds before deciding to stay on it. If you decide to upgrade an existing MDS 9700 to smart licensing, check with support first.
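To make the version cutoff easier to apply across an inventory, here is a minimal Python sketch. It assumes, as described above, that 9.2(1a) is the last release that still honors license files; verify that with support for your hardware. The function names `parse_release` and `uses_smart_licensing` are made up for illustration and are not part of any Cisco tool.

```python
import re

# Assumption from the notes above: 9.2(1a) is the last release that still
# supports legacy license files. Confirm with support before relying on it.
LAST_LEGACY = "9.2(1a)"

# Accepts both "9.2(1a)" and the dotted spelling "9.2.2".
_RELEASE_RE = re.compile(
    r"(\d+)\.(\d+)(?:\((\d+)([a-z]*)\)|\.(\d+)([a-z]*))"
)


def parse_release(text):
    """Turn a release string into a tuple that sorts in release order."""
    m = _RELEASE_RE.fullmatch(text.strip())
    if m is None:
        raise ValueError(f"unrecognized release string: {text!r}")
    major, minor = int(m.group(1)), int(m.group(2))
    maint = int(m.group(3) or m.group(5))
    letter = m.group(4) or m.group(6) or ""
    return (major, minor, maint, letter)


def uses_smart_licensing(release):
    """True if the release is newer than the last legacy-license release."""
    return parse_release(release) > parse_release(LAST_LEGACY)


if __name__ == "__main__":
    for rel in ["8.4(2e)", "9.2(1a)", "9.2.2", "9.4(1)"]:
        mode = "smart licensing" if uses_smart_licensing(rel) else "license file"
        print(f"{rel}: {mode}")
```

A wildcard such as `9.4.x` is rejected with a `ValueError` on purpose, so a vague inventory entry is flagged instead of silently classified.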

All the new MDS 9700 switches ship with firmware 9.4.x, so smart licensing is enabled automatically. I checked with support, and there is no license file any more on these new MDS 9700 switches; the license is installed at the factory.

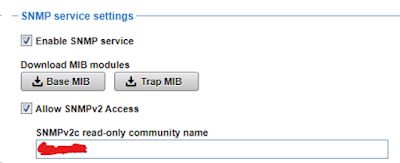

Because it is a closed environment, we set up NDFC smart licensing in offline mixed mode. Mixed mode supports the existing license files on older MDS 9700 switches running 8.4 firmware. We contacted support to convert the existing legacy DCNM server license to a smart license before the upgrade. At least once every 365 days, or whenever a switch is added or decommissioned, I export the license info, upload it to the Cisco software support website, and then import the result back into the licensing section of the NDFC server.
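Since the offline sync is a manual step, it is easy to miss. Below is a small Python sketch of the rule I follow: sync at least every 365 days, or right away after a switch is added or decommissioned. The 365-day interval is the figure from my setup above. The function names are hypothetical, and this does not call any NDFC API; it only does the date arithmetic.

```python
from datetime import date, timedelta

# Interval taken from the notes above; adjust if your policy differs.
SYNC_INTERVAL = timedelta(days=365)


def next_sync_deadline(last_sync):
    """Latest date by which the next offline license sync should happen."""
    return last_sync + SYNC_INTERVAL


def sync_due(last_sync, today, inventory_changed=False):
    """True if a sync is needed: the interval has elapsed or the inventory changed."""
    return inventory_changed or (today - last_sync) >= SYNC_INTERVAL


if __name__ == "__main__":
    last = date(2025, 1, 1)  # example date only
    print("Next deadline:", next_sync_deadline(last))
    print("Due today?", sync_due(last, date.today()))
```

Passing `inventory_changed=True` after adding or decommissioning a switch forces the check to report that a sync is due, regardless of the date.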

Below are some Cisco Smart Licensing links and documents for your information.

Cisco

MDS Smart Licensing Using Policy Data Sheet